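```python
import time

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

browser = webdriver.Edge()
browser.get('https://www.szse.cn/disclosure/listed/fixed/index.html')

# Type the company name into the search box and submit the search
element = browser.find_element(By.ID, 'input_code')
element.send_keys('苏宁易购' + Keys.RETURN)

# The results table is filled in by JavaScript, so wait for it to appear
WebDriverWait(browser, 10).until(
    EC.presence_of_element_located((By.ID, 'disclosure-table')))
# Crude extra pause: the table may already exist before the search results
# replace the default listing (2 s is an arbitrary guess)
time.sleep(2)

element = browser.find_element(By.ID, 'disclosure-table')
innerHTML = element.get_attribute('innerHTML')

# Save the raw table HTML for the parsing steps below
with open('innerHTML.html', 'w', encoding='utf-8') as f:
    f.write(innerHTML)

browser.quit()
```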

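```python
import re

import pandas as pd
from bs4 import BeautifulSoup


def to_pretty(fhtml):
    """Re-indent an HTML file with BeautifulSoup, save a '-prettified' copy,
    and return the prettified text."""
    with open(fhtml, encoding='utf-8') as f:
        html = f.read()

    soup = BeautifulSoup(html, features='lxml')
    html_prettified = soup.prettify()

    # 'innerHTML.html' -> 'innerHTML-prettified.html' (drops the '.html' suffix)
    with open(fhtml[0:-5] + '-prettified.html', 'w', encoding='utf-8') as f:
        f.write(html_prettified)

    return html_prettified


html = to_pretty('innerHTML.html')
```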
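`to_pretty` derives the output name with `fhtml[0:-5]`, which only works when the file name ends in the five characters `.html`. A hedged sketch of a more general alternative using the standard-library `pathlib`; the helper name `prettified_name` is hypothetical and not part of the script.

```python
from pathlib import Path


def prettified_name(fhtml):
    """Hypothetical helper: 'innerHTML.html' -> 'innerHTML-prettified.html'.

    Path.stem and Path.suffix handle any extension, whereas fhtml[0:-5]
    assumes the name ends with the 5-character '.html'.
    """
    p = Path(fhtml)
    return str(p.with_name(p.stem + '-prettified' + p.suffix))


print(prettified_name('innerHTML.html'))  # innerHTML-prettified.html
print(prettified_name('page.htm'))        # page-prettified.htm
```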

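```python
def txt_to_df(html):
    """Split the prettified table HTML into rows and cells, keeping each
    cell's raw inner HTML for the extraction step."""
    # One match per table row; DOTALL lets '.' cross the newlines prettify adds
    p = re.compile('<tr.*?>(.*?)</tr>', re.DOTALL)
    trs = p.findall(html)

    # One match per cell; trs[0] is the header row, so it is skipped
    p2 = re.compile('<td.*?>(.*?)</td>', re.DOTALL)
    tds = [p2.findall(tr) for tr in trs[1:]]

    df = pd.DataFrame({'证券代码': [td[0] for td in tds],
                       '简称': [td[1] for td in tds],
                       '公告标题': [td[2] for td in tds],
                       '公告时间': [td[3] for td in tds]})
    return df


df_txt = txt_to_df(html)
```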
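To see what the row and cell patterns in `txt_to_df` return, here is a self-contained sketch run on a small, made-up table fragment. The HTML, the code `000001`, the company name and the title are invented placeholders, not real SZSE output, and the sketch uses only `re`, so it runs without a browser or network access.

```python
import re

# Hypothetical, simplified table HTML standing in for innerHTML-prettified.html
SAMPLE = """
<tr>
 <th>证券代码</th><th>简称</th><th>公告标题</th><th>公告时间</th>
</tr>
<tr>
 <td><a href="#">000001</a></td>
 <td><a href="#">示例公司</a></td>
 <td><a attachpath="/disc/example/report.PDF" href="#">2021年年度报告</a></td>
 <td><span>2022-04-30</span></td>
</tr>
"""

row_pattern = re.compile('<tr.*?>(.*?)</tr>', re.DOTALL)   # whole rows
cell_pattern = re.compile('<td.*?>(.*?)</td>', re.DOTALL)  # cells inside a row

rows = row_pattern.findall(SAMPLE)
# rows[0] is the header (its cells are <th>, not <td>), so skip it
cells = [cell_pattern.findall(row) for row in rows[1:]]

print(len(rows))    # 2
print(cells[0][0])  # <a href="#">000001</a>
print(cells[0][3])  # <span>2022-04-30</span>
```

Each cell still carries its inner tags; stripping them is left to the extraction step.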

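```python
# '>(.*?)<' captures the text between the first '>' and the next '<' of a
# cell, i.e. the text inside its first tag (<a> for code/name, <span> for time)
p_a = re.compile('>(.*?)<', re.DOTALL)
p_span = re.compile('>(.*?)<', re.DOTALL)
# Captures everything after the first '/' up to the next '"'; this assumes
# the first '/' in the title cell is the start of the attachpath value
p_txt = re.compile('/(.*?)"', re.DOTALL)

get_code = lambda txt: p_a.search(txt).group(1).strip()
get_time = lambda txt: p_span.search(txt).group(1).strip()
get_txt = lambda txt: p_txt.search(txt).group(1).strip()


def get_data(df_txt):
    """Turn the raw cell HTML into clean codes, names, PDF links and dates."""
    prefix = 'https://disc.szse.cn/download/'

    df = df_txt
    codes = [get_code(td) for td in df['证券代码']]
    short_names = [get_code(td) for td in df['简称']]
    atts = [get_txt(td) for td in df['公告标题']]
    times = [get_time(td) for td in df['公告时间']]

    # att is the full path string without its leading '/', so prefix + att
    # is the complete download URL
    df = pd.DataFrame({'证券代码': codes,
                       '简称': short_names,
                       'attachpath': [prefix + att for att in atts],
                       '公告时间': times})
    return df


df_data = get_data(df_txt)
```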
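A minimal, offline sketch of what the extraction patterns do to single cells. The cell strings below are hypothetical, shaped like `BeautifulSoup.prettify()` output (tags and text on separate indented lines), and the attachpath value is invented.

```python
import re

# Same patterns as used for the code/name and attachpath extraction
p_a = re.compile('>(.*?)<', re.DOTALL)
p_txt = re.compile('/(.*?)"', re.DOTALL)

# Hypothetical cell contents in prettified form
code_cell = '\n <a href="#">\n  000001\n </a>\n'
title_cell = ('\n <a attachformat="pdf" attachpath="/disc/example/report.PDF" '
              'href="#">\n  2021年年度报告\n </a>\n')

prefix = 'https://disc.szse.cn/download/'

code = p_a.search(code_cell).group(1).strip()   # text between '>' and '<'
att = p_txt.search(title_cell).group(1).strip() # path after the first '/'
url = prefix + att  # att has no leading '/', and prefix ends with one

print(code)  # 000001
print(url)   # https://disc.szse.cn/download/disc/example/report.PDF
```

Because `get_txt` returns a whole string, `prefix + att` yields the full URL; indexing it as `att[0]` would keep only its first character (`'d'` here).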

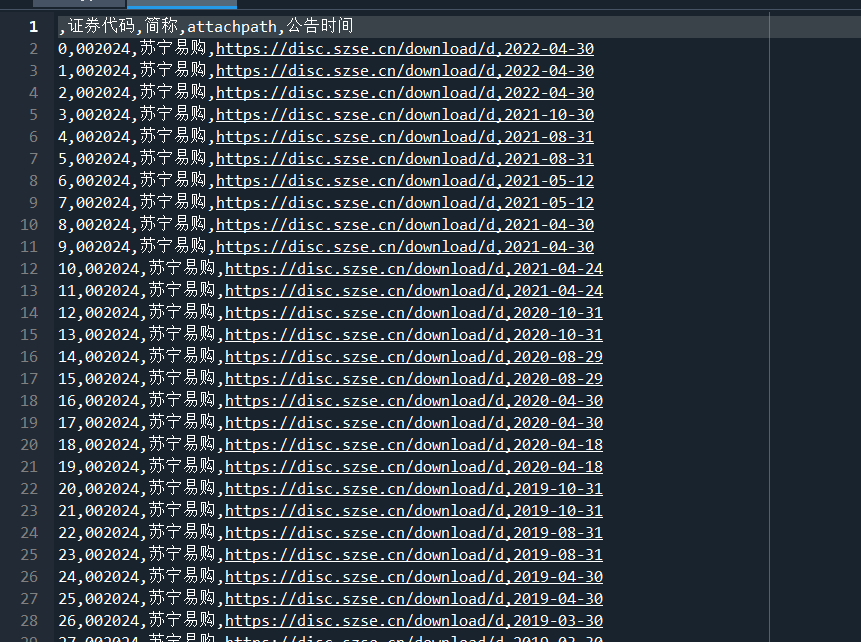

```python
# Save the extracted table
df_data.to_csv('data.csv')
```

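The workflow: first scrape the raw page with browser automation (Selenium), then tidy the HTML with BeautifulSoup, and finally extract the required fields with regular expressions.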
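The regex extraction depends on the exact markup; for example, `'/(.*?)"'` picks up the wrong value if another attribute containing `/` comes before `attachpath`. As an alternative to the regex step (not the approach used above), here is a sketch that walks the table with the standard-library `html.parser.HTMLParser`, collecting each cell's text and any `attachpath` attribute. The class name `TableCells` and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser


class TableCells(HTMLParser):
    """Collect the text of every <td> per <tr>, plus any attachpath attribute."""

    def __init__(self):
        super().__init__()
        self.rows = []         # one list of cell texts per <tr>
        self.attachpaths = []  # attachpath values in document order
        self._in_td = False
        self._cell = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == 'tr':
            self.rows.append([])
        elif tag == 'td':
            self._in_td = True
            self._cell = []
        if 'attachpath' in attrs:
            self.attachpaths.append(attrs['attachpath'])

    def handle_endtag(self, tag):
        if tag == 'td' and self._in_td:
            self.rows[-1].append(''.join(self._cell).strip())
            self._in_td = False

    def handle_data(self, data):
        if self._in_td:  # ignore whitespace between cells
            self._cell.append(data)


# href comes before attachpath here, which would trip up '/(.*?)"'
SAMPLE = """
<table>
 <tr><th>证券代码</th><th>简称</th><th>公告标题</th><th>公告时间</th></tr>
 <tr>
  <td><a href="#">000001</a></td>
  <td><a href="#">示例公司</a></td>
  <td><a href="/x" attachpath="/disc/example/report.PDF">2021年年度报告</a></td>
  <td><span>2022-04-30</span></td>
 </tr>
</table>
"""

parser = TableCells()
parser.feed(SAMPLE)
parser.close()
data_rows = [row for row in parser.rows if row]  # the header row has no <td>
print(data_rows)           # [['000001', '示例公司', '2021年年度报告', '2022-04-30']]
print(parser.attachpaths)  # ['/disc/example/report.PDF']
```

A parser reads attributes by name, so attribute order and extra slashes in other attributes no longer matter.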