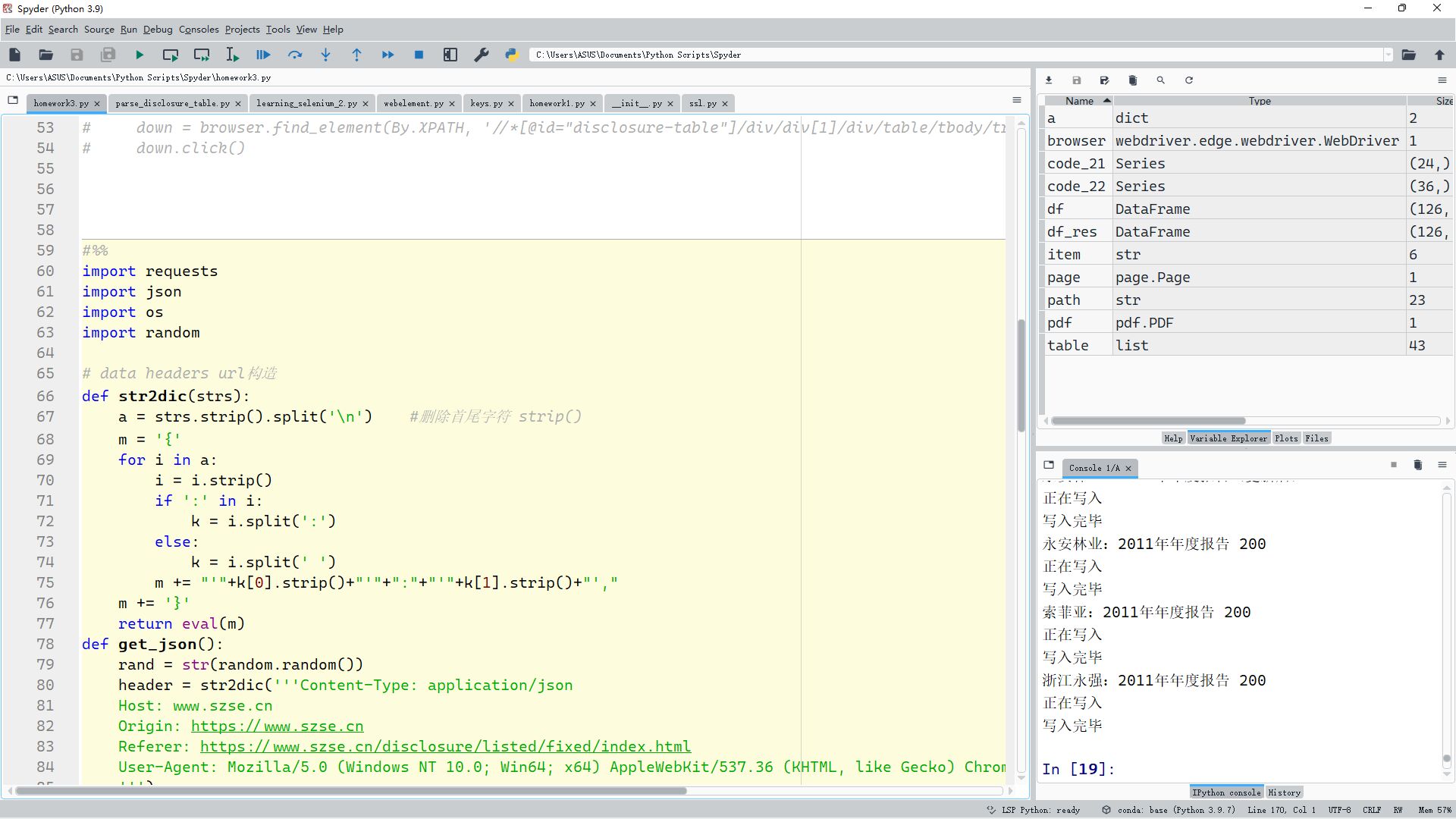

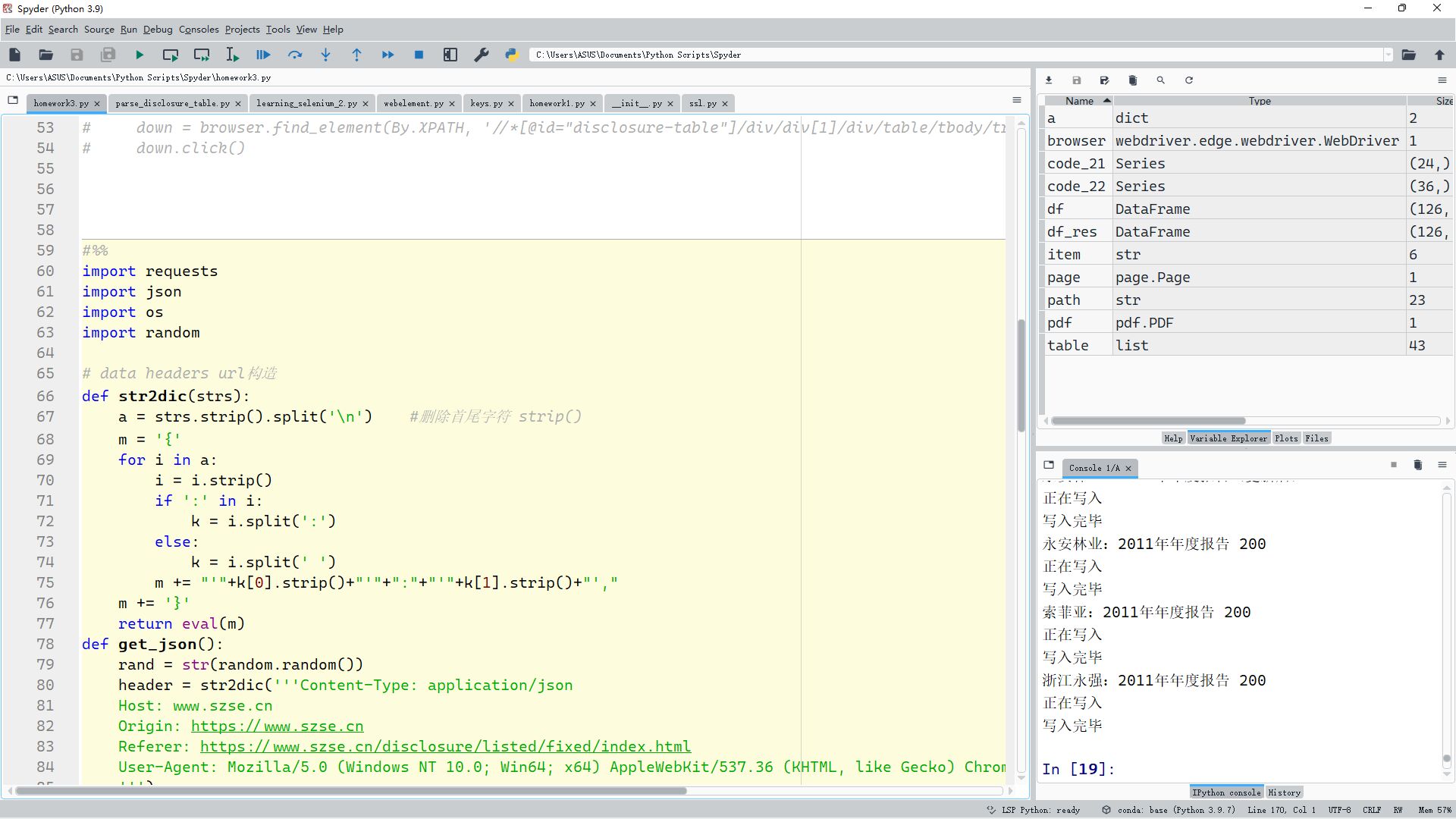

Group Assignment 3 by 彭广威 and 倪泽江

Code

#%%
import json
import os
import random

import requests

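# Build the request data, headers, and URL

def str2dic(strs):
    """Parse 'key: value' or 'key value' lines into a dict."""
    result = {}
    for line in strs.strip().split('\n'):  # strip() removes leading/trailing whitespace
        line = line.strip()
        if not line:
            continue  # skip blank lines
        if ':' in line:
            # Split on the first colon only, so values such as URLs stay intact
            k = line.split(':', 1)
        else:
            k = line.split(None, 1)
        result[k[0].strip()] = k[1].strip()
    return result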

def get_json():
    rand = str(random.random())
    header = str2dic('''Content-Type: application/json
Host: www.szse.cn
Origin: https://www.szse.cn
Referer: https://www.szse.cn/disclosure/listed/fixed/index.html
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.54 Safari/537.36 Edg/101.0.1210.39
''')
    # Stock codes and company names of the firms to query
    daima = '''
000663 永安林业
002489 浙江永强
002572 索菲亚
002751 易尚展示
002853 皮阿诺
300616 尚品宅配
300729 乐歌股份
300749 顶固集创
301061 匠心家居
603008 喜临门
603180 金牌厨柜
603208 江山欧派
603313 梦百合
603326 我乐家居
603389 亚振家居
603600 永艺股份
603610 麒盛科技
603661 恒林股份
603709 中源家居
603801 志邦家居
603816 顾家家居
603818 曲美家居
603833 欧派家居
603898 好莱客
'''
    daima = str2dic(daima)
    stock_ids = list(daima.keys())
    url = 'https://www.szse.cn/api/disc/announcement/annList?' + rand

    def make_payload(page_num):
        # Request body as a dict; json.dumps() serializes it to a string before sending
        return {"seDate": ["2012-01-01", "2022-5-12"], "stock": stock_ids,
                "channelCode": ["fixed_disc"], "bigCategoryId": ["010301"],
                "pageSize": 50, "pageNum": page_num}

    # Request the first page to obtain the filtered announcement data
    index = requests.post(url, data=json.dumps(make_payload(1)), headers=header)
    data_j = index.json()
    # announceCount gives the total number of records. Only one page (50 records)
    # has been fetched so far, so the remaining pages must be requested separately.
    count = data_j["announceCount"]
    count = (count + 49) // 50  # number of pages, rounded up
    for i in range(2, count + 1):
        page = requests.post(url, data=json.dumps(make_payload(i)), headers=header).json()
        # Append each later page to the first page's data so everything is processed together
        data_j['data'].extend(page['data'])
    return data_j

# Build the download links

# Extract the links to all annual-report PDFs from the JSON

def get_url(data_j):
    down_head = 'https://disc.szse.cn/download'
    reports_url = []
    all_d = data_j['data']
    for report in all_d:
        # Skip summaries ('摘要') and cancelled, pre-revision reports ('取消')
        if '取消' in report['title'] or '摘要' in report['title']:
            continue
        # File names must not contain '*'
        reports_url.append((down_head + report['attachPath'], report['title'].replace('*', '')))
    return reports_url

def reques_url(url):
    # Request each annual-report link in turn and write the response to a file
    os.makedirs('reports', exist_ok=True)
    path = 'reports/' + url[1] + '.pdf'
    # Skip files that already exist, so an interrupted run can be resumed
    if not os.path.exists(path):
        rep = requests.get(url[0])
        print(url[1], rep.status_code)
        with open(path, 'wb') as fp:
            print('Writing')
            fp.write(rep.content)
            print('Done writing')

def main():
    data_j = get_json()
    reports_urls = get_url(data_j)
    for url in reports_urls:
        reques_url(url)

if __name__ == '__main__':
    main()
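
The paging arithmetic in `get_json()` can be checked on its own, without contacting the server. The following is a minimal sketch, not part of the original script: the helper names `page_count` and `remaining_pages` are hypothetical, and the page size of 50 is taken from the `pageSize` field of the request above.

```python
def page_count(total, page_size=50):
    """Number of pages needed to hold `total` records (ceiling division)."""
    return -(-total // page_size)


def remaining_pages(total, page_size=50):
    """Page numbers still to request after page 1 has been fetched."""
    return list(range(2, page_count(total, page_size) + 1))


if __name__ == "__main__":
    # 120 records at 50 per page need 3 pages; pages 2 and 3 remain after the first request
    print(page_count(120))       # 3
    print(remaining_pages(120))  # [2, 3]
```

When the total is an exact multiple of the page size (for example 100 records), `page_count` returns 2 rather than 3, which is the case the conditional expression in the script guards against.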

Results

Explanation